As digital learning becomes the norm, maintaining academic integrity grows more complex.

With assessments moving online, traditional safeguards often fall short.

Artificial intelligence (AI) is emerging as a key tool in ensuring that educational standards remain credible, secure and fair.

From proctoring to personalised learning and bias detection, AI's role now extends beyond efficiency: it can actively support integrity at every stage of the academic process.

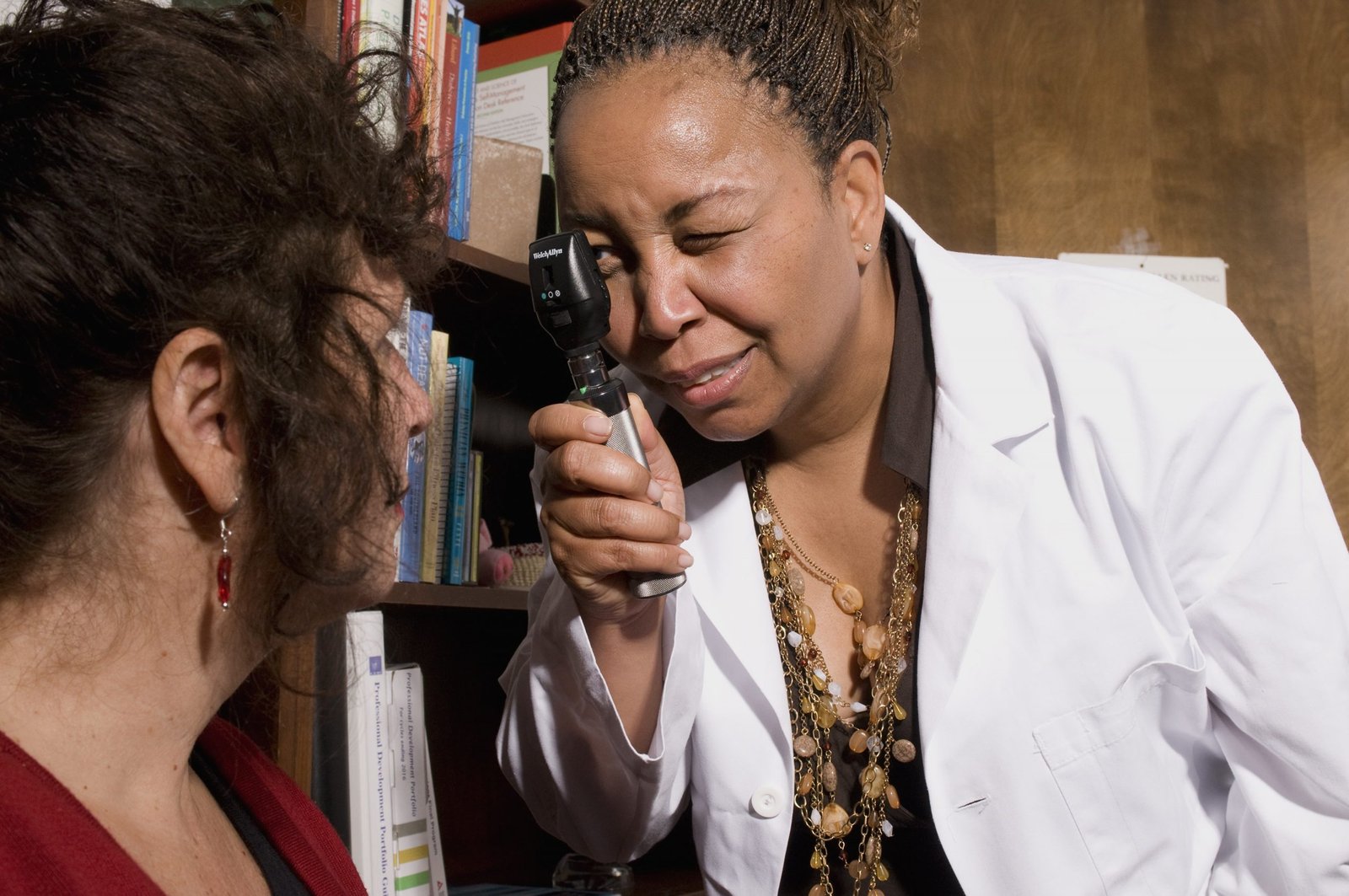

AI-Assisted Exam Monitoring

One of the most prominent uses of AI in education is remote exam proctoring. In virtual settings, manual supervision is difficult to scale and prone to inconsistency. This has made AI-assisted proctoring software an increasingly common solution.

Using machine learning, facial recognition and behavioural tracking, these systems flag irregularities in real time, such as unauthorised materials, suspicious movements or multiple people in the room. After the exam, educators receive a report of the flagged moments to review, allowing them to focus on genuine concerns.
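To make the post-exam report more concrete, here is a minimal Python sketch of one step such a system might perform: grouping flags that occur close together into review windows, so an educator checks one clip per incident instead of many near-duplicate alerts. The `FlagEvent` schema, the flag labels and the 30-second merge gap are hypothetical assumptions for illustration; real proctoring products use their own data formats and detection models, which are not shown here.

```python
from dataclasses import dataclass


@dataclass
class FlagEvent:
    """A single irregularity raised during an exam (hypothetical schema)."""
    timestamp: float  # seconds since the exam started
    kind: str         # hypothetical label, e.g. "multiple_faces"


def build_review_report(events, merge_gap=30.0):
    """Merge flags that occur within `merge_gap` seconds into review windows."""
    windows = []
    for event in sorted(events, key=lambda e: e.timestamp):
        if windows and event.timestamp - windows[-1]["end"] <= merge_gap:
            # Close to the previous flag: extend the current window.
            windows[-1]["end"] = event.timestamp
            windows[-1]["kinds"].add(event.kind)
        else:
            # Far from the previous flag: start a new window for human review.
            windows.append({"start": event.timestamp,
                            "end": event.timestamp,
                            "kinds": {event.kind}})
    return windows


# Illustrative flags from one exam session (made-up values).
events = [
    FlagEvent(135.0, "multiple_faces"),
    FlagEvent(120.0, "multiple_faces"),
    FlagEvent(900.0, "phone_detected"),
]
report = build_review_report(events)
for window in report:
    print(f"{window['start']:.0f}s-{window['end']:.0f}s: {sorted(window['kinds'])}")
```

The final decision on each window still rests with the educator; the sketch only reduces how much footage they need to review.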

Rather than replacing human judgment, AI enhances it. Tools such as AI-assisted proctoring software enable large-scale, secure assessments without compromising oversight.

Personalised Learning Pathways

AI supports integrity by making learning more individualised and equitable. Adaptive platforms use predictive analytics to identify where a student is struggling and adjust the content accordingly. This can reduce frustration and disengagement, which are common drivers of academic dishonesty.

When students learn at their own pace, they’re more likely to succeed honestly. Personalised pathways offer fairer access to instruction, encouraging authentic performance and reducing the temptation to cheat.

Intelligent Plagiarism Detection

Plagiarism detection has become more sophisticated thanks to natural language processing (NLP) and semantic analysis. These AI-powered tools go beyond exact matches, identifying paraphrased or manipulated content across vast datasets.

This helps institutions maintain high standards, especially in an era where content spinning and AI-generated writing are growing concerns. Transparent reporting also helps students understand originality expectations, turning plagiarism detection into a learning opportunity rather than just a punitive measure.
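Production plagiarism tools rely on trained NLP models to compare meaning. As a much simpler, purely lexical stand-in, the Python sketch below computes cosine similarity between word-count vectors of two passages. It catches copying that has only been reordered or rearranged, but it would miss synonym substitution, which is exactly the gap semantic analysis is meant to close. The function names and example sentences are illustrative assumptions, not any vendor's method.

```python
import math
import re
from collections import Counter


def word_vector(text):
    """Count lower-cased words; punctuation is ignored."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors (0.0 to 1.0)."""
    va, vb = word_vector(a), word_vector(b)
    dot = sum(va[w] * vb[w] for w in va.keys() & vb.keys())
    norm = (math.sqrt(sum(c * c for c in va.values()))
            * math.sqrt(sum(c * c for c in vb.values())))
    return dot / norm if norm else 0.0


source = "Students learn best when feedback is timely and specific."
reordered = "When feedback is specific and timely, students learn best."
unrelated = "The exam timetable will be published next week."

print(round(cosine_similarity(source, reordered), 2))
print(round(cosine_similarity(source, unrelated), 2))
```

A semantic tool would replace `word_vector` with embeddings from a language model, so that passages with different wording but the same meaning also score highly.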

Bias Reduction and Fairer Assessments

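AI can uncover biases in assessment design that might disadvantage certain groups. By analysing test data across demographic groups, it can identify questions that show differential item functioning (DIF), where students of comparable ability from different groups have different chances of answering correctly. Using pilot or past test data, educators can then revise or remove those questions before they appear in live exams.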
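One widely used statistical check for DIF is the Mantel-Haenszel procedure. Students are grouped by total test score, so that only students of comparable overall ability are compared, and the odds of answering a given item correctly are pooled across those score groups for a reference group and a focal group. The Python sketch below assumes a hypothetical input format of `(group, total_score, item_correct)` tuples and uses made-up toy data. It reports the common odds ratio alpha and the ETS delta value, delta = -2.35 × ln(alpha). Values near 0 suggest little DIF, negative values mean the item is harder for the focal group than for reference-group students with the same total score, and an absolute value of 1.5 or more, combined with a significance test not shown here, is conventionally treated as large DIF.

```python
import math
from collections import defaultdict


def mantel_haenszel_dif(responses):
    """Estimate DIF for one test item with the Mantel-Haenszel procedure.

    `responses` holds (group, total_score, item_correct) tuples, where group
    is "reference" or "focal" (hypothetical input format).
    Returns (alpha_mh, delta_mh).
    """
    # Per score stratum: [reference right, reference wrong, focal right, focal wrong]
    strata = defaultdict(lambda: [0, 0, 0, 0])
    for group, total_score, correct in responses:
        column = (0 if group == "reference" else 2) + (0 if correct else 1)
        strata[total_score][column] += 1

    numerator = denominator = 0.0
    for a, b, c, d in strata.values():
        n = a + b + c + d
        numerator += a * d / n
        denominator += b * c / n
    if numerator == 0 or denominator == 0:
        raise ValueError("Not enough variation in responses to estimate DIF")

    alpha_mh = numerator / denominator     # common odds ratio across strata
    delta_mh = -2.35 * math.log(alpha_mh)  # ETS delta scale
    return alpha_mh, delta_mh


def toy_responses(group, score, right, wrong):
    """Build made-up responses for one group at one total-score level."""
    return [(group, score, True)] * right + [(group, score, False)] * wrong


data = (toy_responses("reference", 5, 8, 2) + toy_responses("focal", 5, 5, 5)
        + toy_responses("reference", 8, 9, 1) + toy_responses("focal", 8, 6, 4))
alpha, delta = mantel_haenszel_dif(data)
print(f"alpha_MH = {alpha:.2f}, delta_MH = {delta:.2f}")
```

In this toy data, focal-group students answer the item correctly less often than reference-group students with the same total score, so the item would be sent back to educators for review.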

AI also supports consistent grading. Automated evaluation of responses, especially in objective sections such as multiple-choice questions, reduces human variability and helps ensure fairness in marking. This builds trust in the system and reinforces integrity at scale.

Data-Driven Early Intervention

AI is increasingly used to monitor student engagement and performance, identifying risks early. Patterns such as sudden grade drops or irregular participation can indicate that a student is struggling.

By flagging these issues, educators can step in with support before misconduct occurs. This preventative use of AI fosters a culture of care, where students are guided rather than punished, reducing the likelihood of integrity breaches.
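A minimal rule-based version of this kind of early-warning check is sketched below in Python. It flags a student when their latest grade falls well below their own earlier average, or when their attendance rate drops below a set level. The function name, the 15-point drop threshold and the 70% attendance threshold are hypothetical values chosen for illustration; platforms that use predictive analytics would typically learn such patterns from data rather than hard-code them.

```python
from statistics import mean


def early_warning_flags(grades, attendance,
                        drop_threshold=15.0, attendance_threshold=0.7):
    """Return reasons to check in with a student (hypothetical rules).

    grades: chronological percentage scores for one student.
    attendance: one boolean per scheduled session (True = attended).
    """
    flags = []
    if len(grades) >= 2:
        baseline = mean(grades[:-1])  # the student's own earlier average
        if baseline - grades[-1] >= drop_threshold:
            flags.append("sudden grade drop")
    if attendance and sum(attendance) / len(attendance) < attendance_threshold:
        flags.append("irregular participation")
    return flags


struggling = early_warning_flags([78, 82, 80, 58],
                                 [True, True, False, True, False, False])
steady = early_warning_flags([70, 72, 71], [True] * 5)
print(struggling)
print(steady)
```

Comparing each student with their own history, rather than with the class average, keeps the check focused on sudden changes instead of singling out students who are consistently lower-scoring. A flag is a prompt for an educator to offer support, not evidence of misconduct.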

Balancing Innovation with Ethics

The growing presence of AI in education must be matched with ethical oversight. Concerns around privacy, algorithmic bias and student consent are valid. Responsible systems prioritise transparency—clearly explaining what data is collected, how it’s used, and how decisions are made.

The best approach keeps humans in the loop. Final decisions on misconduct, grading, or student support should always involve educators. This maintains accountability and ensures AI enhances rather than replaces academic processes.